Center of Excellence Security - Artificial Intelligence / LLM Penetration Testing

AI & LLM Penetration Testing

Secure your AI with our AI/LLM Pen Testing. We find vulnerabilities in your AI models and large language systems, protecting your innovations and data.

Artificial Intelligence / LLM Penetration Testing at COE Security

At COE Security, our Artificial Intelligence (AI) and Large Language Model (LLM) Penetration Testing service focuses on identifying vulnerabilities and risks within AI models and systems, including LLMs like GPT, BERT, and other AI-driven technologies. As AI systems and LLMs become more integrated into business processes, they pose unique security challenges. The complex nature of AI models, along with their reliance on vast datasets and intricate algorithms, makes them susceptible to a variety of attacks ranging from adversarial inputs and data poisoning to model inversion and privacy risks.

Our penetration testing service for AI and LLMs simulates potential attack vectors to uncover weaknesses and flaws in your AI models, APIs, training data, and deployment environments. This proactive approach allows you to assess the robustness of your AI systems, ensuring that they are secure, reliable, and resistant to manipulation or misuse by malicious actors.

Our Approach

Define scope and AI components: Identify LLMs, APIs, data pipelines, and integrations subject to testing across training and inference layers.

Enumerate attack surfaces and inputs: Map user inputs, plugins, prompts, and APIs used to interface with the AI system or model.

Evaluate prompt injection and manipulation: Test for jailbreaks, prompt leaking, role confusion, and output manipulation through crafted input payloads.

Test model output filtering and alignment: Validate whether safety controls prevent toxic, biased, or harmful outputs in adversarial input conditions.

Assess training data exposure risks: Probe for unintended memorization, sensitive data leakage, and training data inversion through generative outputs.

Probe for plugin and API abuse: Simulate malicious use or chaining of third-party plugins, APIs, or external functions for unauthorized access.

Inspect authentication and session control: Evaluate token handling, session isolation, and misuse of identity in AI-integrated user workflows.

Analyze model behavior under adversarial input: Submit edge-case or malicious inputs to test robustness, hallucination frequency, and error handling logic.

Review logging, telemetry, and observability: Check for secure handling of logs, prompt records, and telemetry to avoid unintended data disclosures.

Report findings and provide recommendations: Deliver actionable findings, impact analysis, and tailored mitigation strategies aligned with AI risk frameworks.

Model Vulnerability Assessment

Data Security and Privacy

API and Integration Security

Deployment and Environment Security

Our Testing Process

Our established methodology delivers comprehensive testing and actionable recommendations.

Analyze

Threat Model

Passive/Active Testing

Exploitation

Reporting

Why Choose COE Security’s AI / LLM Penetration Testing?

Specialized expertise in LLM security: We understand the nuances of AI-specific threats like prompt injection and data leakage.

Full-stack AI attack simulations: Tests span prompts, plugins, APIs, models, and user interactions not just model-level probing.

Alignment with emerging AI standards: Our methodology reflects NIST AI RMF, OWASP LLM Top 10, and industry risk principles.

Red-teaming inspired approach: Simulate realistic adversarial behavior, including social engineering and chained plugin attacks.

Data exposure and memorization testing: Identify if your LLM leaks sensitive or proprietary training data during outputs

Secure integration verification: Assess how your LLM interacts with plugins, APIs, and user sessions across the application.

Privacy, ethics, and alignment checks: Evaluate compliance with organizational safety, privacy, and model behavior policies.

Actionable, technical remediation guidance: Fix vulnerabilities with step-by-step help tailored to your AI stack and usage.

Post-mitigation retesting and validation: We ensure your fixes are effective and risks are fully addressed post-remediation.

Trusted by AI innovators and enterprises: Proven success with startups, research labs, and AI-integrated business platforms.

Five areas of AI & LLM Penetration Testing

Internet of Things (IoT)

Cloud Security / Penetration Testing

Cloud security is a vital discipline focused on safeguarding data, applications, and infrastructure within cloud environments. It encompasses a broad range of practices and technologies designed to protect cloud-based systems from internal and external threats. This includes securing data storage, managing access controls, monitoring for unauthorized activities, and ensuring compliance with industry standards. Cloud security assessments involve evaluating the configuration of cloud services, identifying misconfigurations, and testing identity and access management (IAM) policies to detect potential weaknesses. By implementing robust cloud security measures, organizations can maintain the confidentiality, integrity, and availability of their cloud assets, ensuring secure and resilient operations across public, private, and hybrid cloud infrastructures.

Application Penetration Testing

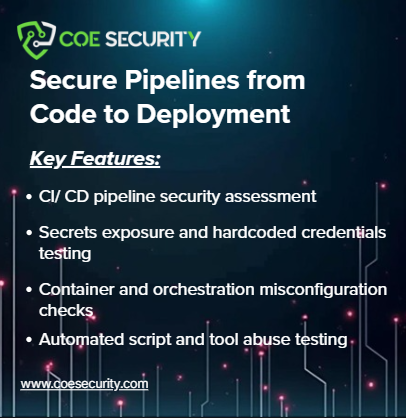

DevOps Security Testing

Firmware Security

Advanced Offensive Security Solutions

Why Partner With COE Security?

Your trusted ally in uncovering risks, strengthening defenses, and driving innovation securely.

Expert Team

Certified cybersecurity professionals you can trust.

Standards-Based Approach

Testing aligned with OWASP, SANS, and NIST.

Actionable Insights

Clear reports with practical remediation steps.

Our Products Expertise

Information Security Blog

AI Meets Application Security: Claude Code Security Brings Automated Vulnerability Detection to Developers

Artificial intelligence continues to reshape software development, and the latest advancement comes…

When AI Creates Passwords: Convenience Turning Into a Security Risk

Large Language Models are rapidly becoming part of everyday workflows, helping users…

Extended Data Exposure Incident Highlights Growing Risks in Financial Platforms

A recent security incident involving PayPal has brought renewed attention to data protection challenges…