Center of Excellence Security - AI Security Posture Assessment

Defend Your AI Ecosystem with Cutting-Edge Security!

Evaluate, strengthen, and secure your AI systems with our specialized assessment services.

AI Security Posture Assessment at COE Security

At COE Security, we offer specialized AI Security Posture Assessments to help organizations secure their artificial intelligence and machine learning systems against emerging threats. As AI technologies become more deeply embedded in business operations, protecting these systems from data manipulation, model poisoning, adversarial inputs, and unauthorized access is essential.

Our assessments focus on evaluating the entire AI/ML lifecycle – from data ingestion and model training to deployment and inference. We analyze the confidentiality, integrity, and availability of AI systems while ensuring they comply with ethical guidelines, regulatory frameworks, and security best practices.

By identifying vulnerabilities specific to AI systems and recommending tailored security measures, COE Security helps organizations build trust in their AI models and ensure their resilience against both conventional and AI-specific threats.

Our Approach

Define AI Asset Inventory and Scope: Identify all AI models, pipelines, datasets, and integrations to determine the full assessment coverage and business impact areas.

Map Threat Landscape for AI Systems: Analyze threat vectors including data poisoning, model theft, adversarial inputs, and supply chain risks relevant to each AI asset.

Evaluate Data Pipeline Security: Assess the integrity, confidentiality, and traceability of training and inference data across internal and third-party sources.

Assess Model Robustness and Behavior: Test AI models for resistance to adversarial inputs, logic corruption, evasion, and model inversion attacks.

Review Model Deployment and Access Controls: Examine how models are hosted, versioned, and accessed, ensuring proper authentication, authorization, and audit logging.

Analyze ML Infrastructure and APIs: Evaluate the security of the ML stack including containers, orchestration tools, endpoints, and exposed APIs.

Check AI Supply Chain Dependencies: Identify and assess open-source models, libraries, and pre-trained components for backdoors or unverified code risks.

Conduct AI-Specific Penetration Testing: Simulate targeted attacks against AI inputs, outputs, and interfaces to uncover exploitable weaknesses.

Benchmark Against AI Security Frameworks: Map findings to frameworks such as the NIST AI RMF, the OWASP Top 10 for LLM Applications, and ISO/IEC 42001 to gauge compliance and maturity.

Deliver Remediation Plan and Roadmap: Provide detailed recommendations for improving model lifecycle security, monitoring, and governance controls.
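As one illustration of the data pipeline and supply chain steps above, the following is a minimal sketch of an artifact integrity check, not COE Security's actual tooling. It assumes a hypothetical manifest that maps file names (datasets, pre-trained weights) to SHA-256 digests recorded at a trusted point in time; the function names `sha256_of` and `verify_artifacts` are illustrative.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(manifest: dict[str, str], root: Path) -> dict[str, str]:
    """Compare each artifact under `root` with its expected digest.

    `manifest` is a hypothetical mapping of relative file name to the
    SHA-256 hex digest recorded when the artifact was approved.
    Returns a status per artifact: 'ok', 'missing', or 'mismatch'.
    """
    results = {}
    for relative_name, expected in manifest.items():
        candidate = root / relative_name
        if not candidate.is_file():
            results[relative_name] = "missing"
        elif sha256_of(candidate) != expected.lower():
            results[relative_name] = "mismatch"
        else:
            results[relative_name] = "ok"
    return results
```

A check like this only detects changes made after the manifest was recorded; it says nothing about whether the approved artifact was trustworthy in the first place, which is why it complements rather than replaces the review of third-party components.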

Model Security Evaluation

Data Pipeline Integrity

Compliance & Ethics Review

Integration & Interface Analysis

AI Security Posture Assessment Process

Assess

Analyze

Report

Remediate

Monitor

Why Choose COE Security’s AI Security Posture Assessment?

End-to-End AI Risk Coverage: We assess data, models, APIs, and infrastructure to provide a complete view of your AI threat landscape.

Expertise in Adversarial ML: Our specialists simulate cutting-edge threats like evasion, extraction, and poisoning to test model resilience.

Customized for Your AI Use Cases: We tailor assessments to your industry-specific AI applications, whether in finance, healthcare, or SaaS.

Security for Model and Data Pipelines: We evaluate AI at every stage, from training data ingestion to real-time inference outputs.

Focus on AI-Specific Threats: We go beyond traditional security and address AI-specific concerns like model leakage and prompt injection.

Aligned with Emerging AI Standards: Our assessments are built to reflect current global frameworks and regulatory requirements.

Actionable Risk Remediation Guidance: We provide clear steps to mitigate identified risks without disrupting model performance or availability.

Support for Model Governance and Audit: We help you build secure, auditable AI pipelines aligned with responsible AI principles.

Proven Experience in AI Security Testing: COE Security brings deep offensive and defensive expertise in AI/ML security assessments.

Enabling Trustworthy AI Deployments: We empower you to deploy and scale AI solutions with confidence, compliance, and resilience.

Five Areas of AI Security Posture Assessment

AI Runtime Defense Analysis

At COE Security LLC, our AI Runtime Defense Analysis service focuses on protecting AI systems during their operational phase against emerging threats and adversarial attacks. We monitor AI models in real time to detect anomalies, data poisoning, and model evasion tactics that could compromise accuracy or integrity. Utilizing advanced behavioral analytics and threat intelligence, we provide continuous defense tailored to your AI environment. Our proactive approach includes incident detection, mitigation strategies, and compliance alignment to ensure safe and reliable AI deployment. Trust COE Security to safeguard your AI investments and maintain operational resilience in an evolving threat landscape.
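To make the idea of runtime anomaly detection concrete, here is a minimal sketch under stated assumptions, not a description of the monitoring COE Security deploys. It assumes the model exposes a per-prediction confidence score and flags scores that deviate sharply from a rolling baseline; the class name `ConfidenceMonitor` and its default parameters are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class ConfidenceMonitor:
    """Flag confidence scores that sit far from a rolling baseline."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_samples: int = 10):
        self.history = deque(maxlen=window)  # recent scores treated as normal
        self.threshold = threshold           # allowed distance in standard deviations
        self.min_samples = min_samples       # warm-up size before any flagging

    def observe(self, confidence: float) -> bool:
        """Record one score and return True if it looks anomalous."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            baseline = mean(self.history)
            spread = pstdev(self.history)
            # A zero spread gives no usable scale, so nothing is flagged then.
            if spread > 0 and abs(confidence - baseline) / spread > self.threshold:
                anomalous = True
        # Keep flagged scores out of the baseline so outliers do not shift it.
        if not anomalous:
            self.history.append(confidence)
        return anomalous
```

A simple statistical threshold like this is only a starting signal. A sudden drop in confidence may indicate evasion attempts or drifting inputs, but it can also reflect a legitimate change in traffic, so flagged events would normally feed a review process rather than trigger automatic blocking.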

Data Leak Prevention Security Operations

At COE Security LLC, our Data Leak Prevention (DLP) Security Operations services are designed to protect sensitive information from unauthorized access and accidental exposure. We implement state-of-the-art monitoring tools, encryption protocols, and access controls to detect and prevent data breaches. Our approach includes real-time risk assessments, policy enforcement, and user activity monitoring to ensure compliance with regulations such as GDPR and HIPAA. With a focus on proactive threat management, we provide tailored solutions to address insider threats, shadow IT risks, and inadvertent data leaks. Partner with COE Security to safeguard your critical data and maintain organizational trust.

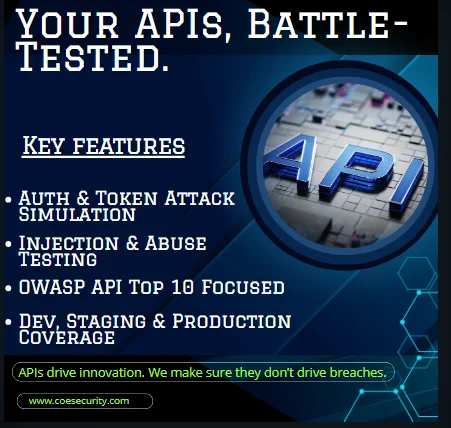

API Penetration Testing

At COE Security LLC, our API Penetration Testing services focus on identifying vulnerabilities in APIs to protect critical data and ensure secure communication between systems. Using advanced testing methodologies, we assess API endpoints for weaknesses such as authentication flaws, improper data validation, and misconfigured permissions. By simulating real-world attack scenarios, we uncover security gaps that could expose sensitive information or disrupt operations. Following the assessment, we deliver detailed reports with actionable insights, empowering you to strengthen API security. Trust COE Security to safeguard your APIs and maintain the integrity and reliability of your digital ecosystem.

AI Ethical Compliance Review

At COE Security LLC, our AI Ethical Compliance Review service ensures that your AI systems operate within ethical and regulatory frameworks. We evaluate your AI models against standards for fairness, transparency, accountability, and privacy, identifying potential biases and compliance gaps. Our experts provide tailored recommendations to align your AI initiatives with regulations such as GDPR and HIPAA, as well as emerging AI laws. By implementing ethical risk assessments, governance protocols, and stakeholder guidelines, we help you foster trust and integrity in AI-driven operations. Partner with COE Security to promote ethical AI practices and maintain compliance in a rapidly evolving digital landscape.

App-to-Cloud Vulnerability Management

At COE Security LLC, our App-to-Cloud Vulnerability Management service ensures end-to-end security for applications and their cloud environments. We identify and address vulnerabilities across the entire application lifecycle, including development, deployment, and operation in the cloud. Using advanced scanning tools and threat modeling, we detect misconfigurations, insecure APIs, and compliance gaps. Our approach includes real-time monitoring, remediation strategies, and risk prioritization to minimize exposure and enhance security posture. Trust COE Security to deliver seamless vulnerability management, protecting your applications and cloud infrastructure from evolving cyber threats.

Advanced Offensive Security Solutions

COE Security empowers your organization with on-demand expertise to uncover vulnerabilities, remediate risks, and strengthen your security posture. Our scalable approach enhances agility, enabling you to address current challenges and adapt to future demands without expanding your workforce.

Why Partner With COE Security?

Your trusted ally in uncovering risks, strengthening defenses, and driving innovation securely.

Expert Team

Certified cybersecurity professionals you can trust.

Standards-Based Approach

Testing aligned with OWASP, SANS, and NIST.

Actionable Insights

Clear reports with practical remediation steps.

Information Security Blog

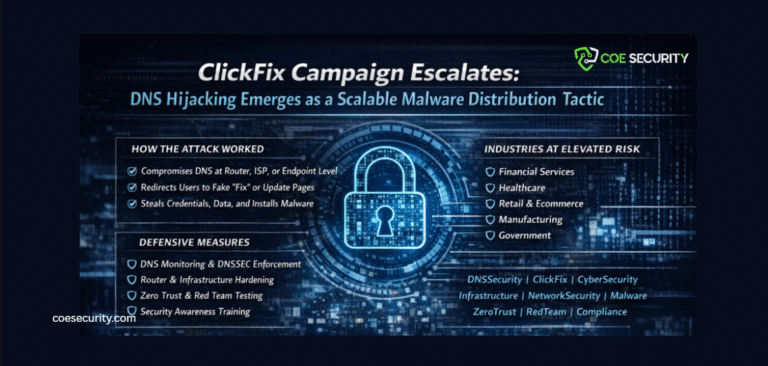

DNS Hijacking Emerges as a Scalable Malware Distribution Tactic

Cyber threats continue to evolve, and the latest ClickFix campaign highlights a…

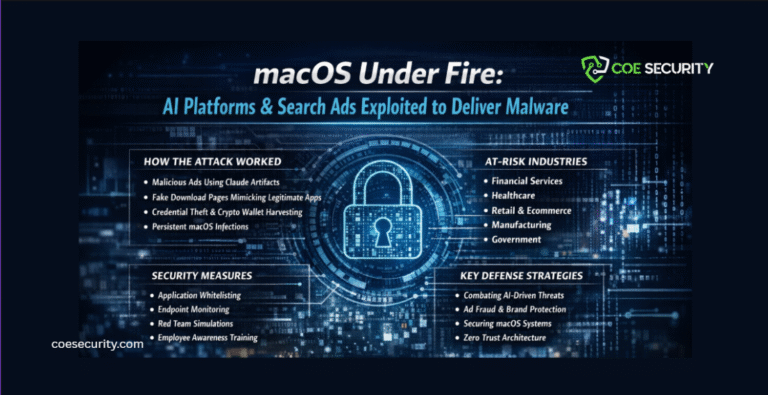

AI Platforms and Search Ads Exploited to Deliver Malware

Cybercriminal tactics are evolving again. A recent campaign uncovered how attackers abused…

serious and unsettling security development

In today’s interconnected enterprise environments, firewalls represent the first and last line…